If you’re here, chances are you enjoy spending time online. The Internet has had a massive influence on our lives, no doubt. It’s one of the biggest inventions on the planet.

The Internet has influenced e-commerce, communication, academics, and every other facet of our lives. It is estimated that approximately 2 million emails are sent, 6,000 tweets are posted, and over 40,000 Google searches are made via the Internet every second.

But even though we know the Internet has influenced our lives greatly, how many people understand how the story began? Who founded the Internet?

Today, people spend most of their time surfing the Internet for information, shopping online, or interacting with friends on social media. But how did we arrive at this point? That’s the question.

Here’s a brief history of the Internet, detailing historical projects, inventors, dates, inventions, and more. After reading this, you’ll understand how it started, including those who dedicated their time to make the Internet a reality. Read on!

Why The Internet Was Created – The Early Motives

The Internet was created for specific reasons. The U.S. government wanted researchers to be able to collaborate and share information with ease.

Information sharing was a big challenge for researchers in the 1960s. Computers used for research were installed at specific sites, so researchers had to travel to those sites to use them.

Besides the lack of any network, computers back then were also very large and immobile.

Researchers could only collaborate by storing digital information on magnetic computer tapes and sending the tapes to colleagues through the postal system.

A Handy Tip: The first magnetic tape used to record computer data was in 1951 and on the UNIVAC I.

The Cold War was another historical event that spurred the creation of the Internet. But how did the war force the hand of the researchers, and the government to embark on this project?

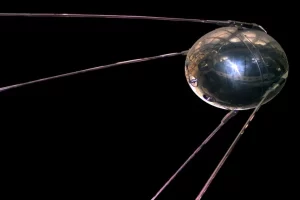

When the Soviet Union launched the Sputnik satellite (pictured above) during the Cold War, America and its allies became concerned.

Sputnik was the first man-made satellite to orbit Earth. It was launched on October 4, 1957, at approximately 7:28 pm UTC. The satellite weighed about 84 kilograms and was roughly the size of a beach ball.

The United States Defense Department was eager to keep the lines of communication between troops open in the wake of a nuclear attack. They feared the enemies (Soviet Union) could spy on them and launch a nuclear attack at any time.

The top brass of the U.S. Defense Department wanted information dissemination to continue should a nuclear war ever happen.

However, the launching of the Sputnik satellite didn’t only kick-start a space race. It also gave the United States government a reason to start thinking critically about science and technology.

The United States government set up and funded several research agencies in 1958. One of them was ARPA (the Advanced Research Projects Agency).

ARPA was a research agency under the U.S. Defense Department, and computer science became one of its major areas of work. It gave researchers a platform to share findings and information and to communicate on research projects.

So, without ARPA, the Internet wouldn’t have come into existence. By the way, one of the agency’s directors, J.C.R. Licklider, conceived the idea of what the Internet would look like.

Official Birthday Of The Internet

The Internet’s official birthday is January 1, 1983. Before then, different computer networks had no standard way to communicate with one another.

A new communications protocol changed everything. The Transmission Control Protocol/Internet Protocol (TCP/IP) was established, and it ushered in a new means of communication.

TCP/IP made it possible for computers on different networks to interact.

On January 1, 1983, the Defense Data Network and ARPANET switched to the TCP/IP standard. It was a historic moment: all networks could now be connected by one universal language.

The Internet Founding Fathers

Who invented the Internet? Well, the Internet wasn’t created by a single individual. Many computer scientists and engineers made contributions that, together, made the development of the Internet possible.

So, the invention of the Internet was a collective effort. And those who made this dream come true were motivated by the development of networking technology.

Now, who are the Internet’s founding fathers, and what role did they play? Here is the list of people who made the creation of the Internet possible.

Paul Baran:

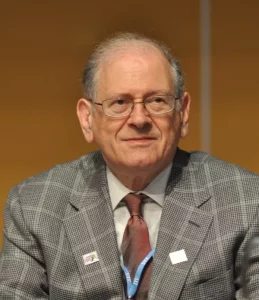

Paul Baran, a Polish-American engineer, was a pioneer in the development of computer networks. While at RAND Corporation, he developed a keen interest in whether communication networks could survive a nuclear war.

So, his work overlapped with ARPA’s. He joined RAND, an American think tank, in 1959. There, he was tasked with helping the United States Air Force maintain control of its fleets if a nuclear attack ever took place.

In 1964, Paul Baran made a contribution that transformed communication networks. He called it the “distributed network.” The beauty of this network is that it had no central command point. Thus, if a specific point got destroyed, communication wouldn’t cease. The surviving points would still be able to communicate as usual.
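To make the survivability argument concrete, here is a minimal sketch in Python. The two toy topologies (`star` and `mesh`) and the site names are hypothetical and are not taken from Baran’s work; the sketch only compares which sites can still reach each other after one point is destroyed.

```python
from collections import deque

def reachable(links, start, destroyed=frozenset()):
    """Return the surviving nodes reachable from start (breadth-first search)."""
    if start in destroyed:
        return set()
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in links.get(node, ()):
            if neighbor not in destroyed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen

# Hypothetical centralized network: every site talks through "HUB".
star = {
    "HUB": ["A", "B", "C", "D"],
    "A": ["HUB"], "B": ["HUB"], "C": ["HUB"], "D": ["HUB"],
}

# Hypothetical distributed network: a ring of five sites plus one extra link.
mesh = {
    "A": ["B", "E", "C"],
    "B": ["A", "C"],
    "C": ["B", "D", "A"],
    "D": ["C", "E"],
    "E": ["D", "A"],
}

# Destroying the hub isolates site A completely.
print(sorted(reachable(star, "A", destroyed={"HUB"})))
# Destroying site C still leaves every other site reachable from A.
print(sorted(reachable(mesh, "A", destroyed={"C"})))
```

In the star layout, losing the central point cuts every site off. In the mesh, traffic simply takes another route.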

Lawrence Roberts:

Dr. Roberts received the prestigious Draper Prize in 2001. He was honored for his transformative contribution to the development of the Internet.

Dr. Roberts was a chief scientist at ARPA. There, he designed and managed the ARPANET, the first packet network.

He not only designed the ARPANET. He was also heavily involved in its implementation, which took place in 1969.

He’s also credited with putting packet switching into practice. This technology breaks data into discrete bundles, sends them along various paths around a network, and reassembles them at the final destination.

Under Dr. Roberts, packet switching became the underlying technology of the ARPANET, and it remains central to the Internet.

In short, he created a network that allowed control to be distributed.

Leonard Kleinrock:

Leonard Kleinrock is another Internet Hall of Famer you cannot leave out when mentioning the people who created the Internet. The American computer scientist developed the mathematical theory behind packet switching.

Historically, Leonard Kleinrock was the first person to send a message between two computers on a network.

He has received several awards in his lifetime. He got the Charles Stark Draper Prize in 2001 and the National Medal of Science in 2007.

Donald Davies:

Donald Davies coined the term “packet” and contributed massively to packet switching’s development. His work on packet-switched data communication influenced the development of the internet heavily.

Davies and his team explored packet switching widely. He also elaborated on the importance of packet switching to computer communications.

Unfortunately, he couldn’t convince the British government to invest in a wide area network experiment. But his work on packet switching was a game changer.

Davies received the British Computer Society Award in 1974, and he wrote several books about computer networks. In 1973, he wrote Communication Networks for Computers. In 1979 and 1984, he wrote Computer Networks and Their Protocols and Security for Computer Networks, respectively.

Bob Kahn And Vinton Cerf:

Kahn and Cerf are massive contributors to the development and smooth running of the Internet. They invented the TCP/IP protocols, the rules that dictate how data should move across a network.

The creation of TCP/IP enabled the ARPANET to evolve into what we call the Internet today.

Historically, Vinton Cerf was the first person to use the term “internet.”

Bob Kahn successfully conducted a demonstration of the ARPANET by linking twenty different computers. He made the demonstration during the International Computer Communication Conference. Kahn’s demonstration also opened the eyes of others to the relevance of packet switching technology.

During his spell as Director of the Information Processing Techniques Office at DARPA, Bob Kahn initiated the United States government’s billion-dollar Strategic Computing Program.

The program was the biggest computer research and development program the United States government had ever funded.

In 1997, Bob Kahn and Vinton Cerf received an award for their role in the Internet’s invention. U.S. President Bill Clinton gave both men the National Medal of Technology. President George W. Bush later awarded both men the Presidential Medal of Freedom.

Kahn and Cerf invented TCP/IP in 1973. It allows data to flow between two computers.

TCP stands for Transmission Control Protocol. IP stands for Internet Protocol.

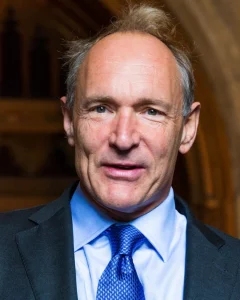

Tim Berners-Lee:

Tim Berners-Lee is another Internet Hall of Famer. His contribution to the development of the Internet is remarkable.

Tim Berners-Lee invented the World Wide Web in 1989, while he was at CERN, the European Particle Physics Laboratory. He wrote the first web server and web client in 1990.

The World Wide Web wasn’t Tim’s only contribution. He also developed HTML, the URL scheme, HTTP, and the first web browser.

Tim is the director of the World Wide Web Consortium, a Web standards organization established in 1994. The organization’s aim is to provide technologies (tools, software, guidelines, and specifications) that would enable the Internet to actualize its full potential.

He has also won several awards in his lifetime. He received the IEEE Computer Society Award in 1996, a Royal Medal in 2000, the Japan Prize and the Marconi Prize in 2002, the Millennium Technology Prize in 2004, the President’s Medal in 2006, and many more.

Paul Mockapetris:

Paul Mockapetris is an American computer scientist credited with making the Internet far easier to use.

Paul, together with Jon Postel and Craig Partridge, developed the DNS (Domain Name System) in 1983. DNS gives every host an identifiable name that maps to its IP address, so users no longer have to look up or remember numeric addresses.
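The core idea of name-to-address mapping can be illustrated with a toy lookup table in Python. This is only a sketch: real DNS is a distributed, hierarchical database queried over the network, and the `RECORDS` table, the `resolve` helper, and the names and addresses in it are hypothetical values from ranges reserved for documentation.

```python
# Toy name table. The entries are reserved documentation values, not real hosts.
RECORDS = {
    "example.com": "192.0.2.10",
    "mail.example.com": "192.0.2.25",
}

def resolve(name):
    """Return the address recorded for a host name, or None if it is unknown.

    Host names are case-insensitive, and a trailing dot is ignored.
    """
    key = name.rstrip(".").lower()
    return RECORDS.get(key)

print(resolve("Example.COM"))       # address stored for example.com
print(resolve("unknown.example"))   # no record for this name
```

People work with the names on the left, while the network works with the numbers on the right.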

Mockapetris has contributed greatly to the evolution of the Internet, both in industry capacity and research. He also made remarkable contributions to the development of LAN technologies and distributed systems.

Paul Mockapetris also served as program manager for networking at ARPA.

The Internet pioneer has picked up several awards for his contribution to the Internet’s evolution. In 2003, he received the IEEE Internet Award and SIGCOMM Award for Lifetime Contribution to the field of communication networks.

Marc Andreessen:

Marc Andreessen is another famous inventor and contributor to the Internet’s growth.

He’s a software engineer, investor, and co-author of Mosaic, the first widely known web browser.

Invention Of The Internet In Phases

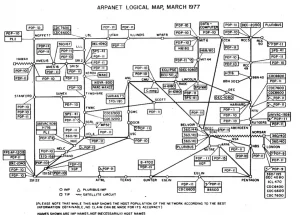

ARPANET. First network to run on the packet switching technology

Have you ever wondered how the idea behind the internet was conceived and how it was created? Many engineers and computer scientists played a huge role in the Internet’s invention. But how did it happen?

Here, we break down the series of events that led to the Internet’s creation.

J.C.R. Licklider’s Networking Concept:

In August 1962, MIT’s J.C.R. Licklider gave details of his “Galactic Network” concept. It was the first recorded description of the social interactions that networking could enable, and it paved the way for the creation of the Internet.

In a series of memos, Licklider explained how people from different parts of the globe could access data and programs via interconnected computers.

He believed people didn’t have to visit a specific location where a computer is to gain access to data. They can do it from different locations.

President Dwight D. Eisenhower created DARPA (Defense Advanced Research Projects Agency) to develop emerging technologies for the U.S. military’s use.

To this day, The Economist regards DARPA as the agency that shaped modern technology. And in all honesty, DARPA can take full or partial credit for a range of inventions. These include stealth technologies, GPS, voice interfaces, weather satellites, personal computers, drones, and the Internet.

Let’s further discuss Licklider’s idea as shared in his memos. J.C.R. Licklider became the first head of the computer research program at DARPA in 1962. While working at DARPA, Licklider sold his networking concept to his successors: Ivan Sutherland, Lawrence Roberts (a famous MIT researcher), and Bob Taylor.

Leonard Kleinrock’s Packet Switching Theory:

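The concept of “packet switching” involves breaking data into smaller pieces, called packets, and sending each piece independently across the network. The pieces may take different paths and are put back together at the destination, which makes the transfer quicker and more efficient.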
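Here is a minimal Python sketch of that idea. The helper names `packetize` and `reassemble`, the sample message, and the packet size are hypothetical, and the shuffle merely stands in for packets arriving out of order after taking different routes.

```python
import random

def packetize(message, size):
    """Split a message into (sequence_number, chunk) packets."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]

def reassemble(packets):
    """Put packets back in sequence order and join their payloads."""
    return b"".join(chunk for _, chunk in sorted(packets))

message = b"packets can travel by different routes"
packets = packetize(message, 8)

# Simulate out-of-order arrival (fixed seed so the run is repeatable).
random.Random(7).shuffle(packets)

# The sequence numbers let the receiver rebuild the original message.
assert reassemble(packets) == message
```

The sequence number attached to each packet is what allows the destination to restore the original order.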

Leonard Kleinrock’s invention of the packet theory opened the door and made achieving Licklider’s networking concept possible.

In 1961, Kleinrock published his first paper on the packet switching theory and published a book on the subject in 1964. He famously developed the mathematical concept behind packet switching.

Kleinrock convinced Lawrence Roberts that communication via packets instead of circuits was feasible, a development that was vital to computer networking’s advancement.

Lawrence Roberts’ desire to make computers interact:

The next big step in computer networking was making computers talk to each other. Lawrence Roberts knew he had to make this happen and needed support. So, in 1965, he worked with Thomas Merrill.

He and Thomas Merrill had the TX-2 computer in Massachusetts linked to the Q-32 in California. They used a low-speed dial-up telephone line to create the first Wide Area Network, however small.

Roberts’ experiment was a success. It showed that time-shared computers could work well together, running programs and retrieving data on the remote machine.

The experiment also exposed a drawback: Roberts and Thomas Merrill discovered that the circuit-switched telephone system wasn’t the right system for the job.

What did this imply? Kleinrock’s case for packet switching was confirmed. The researchers were now fully convinced that circuit switching wasn’t the way forward. Now, here’s the next phase in the Internet’s creation.


Lawrence Roberts’ plan for the ARPANET:

In 1966, Lawrence Roberts moved to DARPA to kick-start the development of the computer network. In 1967, he published his plans for the ARPANET.

However, Roberts was surprised to find another paper on the packet network concept at the conference where he presented his own. It came from the UK-based scientists Donald Davies and Roger Scantlebury of the NPL (National Physical Laboratory).

Scantlebury also pointed out that Paul Baran and other researchers at RAND had worked on the same packet-switching concept.

The RAND group had even written a paper on packet switching networks for secure voice. They wrote it for the military in 1964.

Remarkably, the work on packet switching at MIT from 1961 to 1967, at RAND Corporation from 1962 to 1965, and at NPL from 1964 to 1967 all happened in parallel, without any of the researchers knowing about the others’ work.

The term “packet” was adopted from NPL’s work, and the proposed line speed deployed in the ARPANET’s design was ramped up to 50 kbps from the previous 2.4 kbps.

DARPA released an RFQ for the development of Interface Message Processors:

By August 1968, Lawrence Roberts and the DARPA-funded community had refined the ARPANET’s specification and structure. However, one crucial component still had to be developed: the packet switches known as Interface Message Processors (IMPs).

To get the IMPs built, DARPA released an RFQ (Request for Quotation), inviting individuals and groups to bid for the research and development project.

Finally, a group headed by Frank Heart at Bolt Beranek and Newman (BBN) won DARPA’s RFQ in December 1968. The group started developing the IMPs, with the Internet pioneer Bob Kahn playing a crucial role in ARPANET’s overall architectural design.

Meanwhile, Lawrence Roberts worked with Howard Frank and his team to design and optimize the network’s topology and economics, and Kleinrock’s team at UCLA prepared the network measurement system.

Kleinrock’s UCLA Network Measurement Center becomes the first node on the ARPANET:

Kleinrock’s early development of packet switching theory, together with his focus on design, analysis, and measurement, led DARPA to select his Network Measurement Center as the ARPANET’s first node.

All of this came together in 1969, when BBN (Bolt Beranek and Newman) installed the first-ever IMP at UCLA and the first host computer was connected.

Doug Engelbart’s project on the “Augmentation of Human Intellect” at Stanford Research Institute (SRI) provided the second node for the ARPANET. SRI also supported the Network Information Center, headed by Elizabeth (Jake) Feinler. The center handled critical functions such as maintaining tables of host name to address mappings and a directory of the RFCs (Requests for Comments).

One month later, when SRI was connected to the ARPANET, the first host-to-host message was sent from Kleinrock’s laboratory to SRI. Then two more nodes were added at UC Santa Barbara and the University of Utah.

Notably, the last two nodes incorporated application visualization projects. Glen Culler and Burton Fried at the University of California, Santa Barbara, investigated methods for displaying mathematical functions using storage displays, which dealt with the problem of refreshing the display over the net.

Robert Taylor and the American computer scientist Ivan Edward Sutherland at Utah investigated methods of 3-D representation over the net.

The budding Internet gets off the ground:

History shows that 1969 was the crucial year in which the Internet got off the ground.

How? In 1969, four host computers were linked together into the initial ARPANET, the beginning of something huge for the researchers.

Note that even at this early stage, networking research covered both work on the underlying network and work on how to use the network. That tradition continues to this day.

This breakthrough motivated researchers to link more computers to the ARPANET quickly. Work to build a Host-to-Host protocol and other key network software started.

In 1970, the initial ARPANET Host-to-Host protocol was completed. It was called the NCP (Network Control Protocol) and was built by the Network Working Group (NWG), headed by Stephen D. Crocker.

The ARPANET sites completed their NCP implementations between 1971 and 1972. With NCP in place, network users could finally begin developing applications.

Bob Kahn showcasing the ARPANET at an international conference for the first time:

The public had their first knowledge of the ARPANET and its capabilities in October 1972, thanks to Bob Kahn’s successful exhibition.

During the International Computer Communication Conference (ICCC), Kahn organized a large demonstration of the ARPANET where he linked 20 different computers together. His demonstration also showed the relevance of packet switching.

The year 1972 also saw the introduction of the first “hot” application, electronic mail. In March of that year, BBN’s Raymond Tomlinson wrote the basic software for sending and reading email messages. His motivation was to make coordination between ARPANET developers easier.

In July 1972, Lawrence Roberts saw the need to expand the utility of Tomlinson’s program. Roberts wrote the first email utility program, which could list, selectively read, file, forward, and respond to messages.

From there, email became the biggest network application for more than a decade.

The Initial Internetting Ideas

The ARPANET is the Internet’s precursor. The Internet grew out of it.

What idea was the Internet based on? The idea was that multiple independent networks of rather arbitrary design would exist. This would begin with the ARPANET as the pioneering packet switching network.

However, this wasn’t the end. Soon, there would be packet satellite networks, ground-based packet radio networks, and other networks. The crucial underlying technical idea behind the Internet is open-architecture networking.

In this approach, the choice of any individual network technology wasn’t dictated by a specific network architecture. Instead, a provider could select it freely and make it interwork with other networks through a meta-level “Internetworking Architecture.”

Until that period, only one way of federating networks existed. And it was the traditional circuit switching method. In this method, networks were interconnected at the circuit level. They passed individual bits on a synchronized basis along parts of an end-to-end circuit existing between a pair of end locations.

It was clear that traditional circuit switching wouldn’t provide the needed solution. Thankfully, Kleinrock had already pointed to a more effective one.

In 1961, as earlier explained, Kleinrock had demonstrated that packet switching was a more effective switching method. And together with packet switching, another possibility was special purpose interconnection arrangements organized between networks.

However, other ways to interconnect different networks existed, but they had limitations. One of the networks had to be used as the other’s component instead of operating as a peer in providing end-to-end service.

Here is what happens in an open-architecture network. Each network can be designed and developed separately. So, each of them may have its own unique interface, which it offers to users and other providers.

Furthermore, each network can be designed according to its users’ requirements and its specific environment. There are generally no constraints on the types of network that can be included or on their geographical scope, although some pragmatic considerations will dictate what makes sense to offer.

Bob Kahn, the co-author of the TCP/IP protocols, was the first person to introduce the open-architecture networking idea. That happened in 1972, shortly after he joined DARPA.

Originally, this work was among the packet radio programs. But as time progressed, it became a separate program. During that period, the program’s name was “Internetting.”

A reliable end-to-end protocol made it possible for the packet radio system to function well. The unique thing about this protocol was that it could remain effective despite constant jamming and other radio interferences that could have made it impossible to function properly.

Additionally, the end-to-end protocol could withstand intermittent blackout, normally caused by its location in a tunnel or a local terrain blockage.

Interestingly, Bob Kahn first contemplated building a protocol local only to the packet radio network. That would have avoided having to deal with a multitude of different operating systems and would have allowed continued use of NCP (Network Control Protocol).

Unfortunately, NCP had no way to address networks, or machines further downstream than a destination IMP on the ARPANET. So, some change to NCP would be needed.

The assumption at the time was that the ARPANET could not be changed in this regard. NCP relied fully on the ARPANET to provide end-to-end reliability. Thus, if any packet were lost, the protocol, and presumably any applications it supported, would come to a halt.

In this model, NCP had no end-to-end host error control. The ARPANET was the only network in existence, and it was expected to be so reliable that hosts wouldn’t require error control.

This is where Bob Kahn stepped in and provided a solution that revolutionized the Internet. He set out to develop what would become TCP/IP, the Transmission Control Protocol/Internet Protocol.

TCP/IP was a new version of the protocol that met the requirements of an open-architecture network environment. While NCP acted like a device driver, the new protocols were more like a communications protocol.

Kahn did not work alone, though. He and Vinton Cerf, then a professor at Stanford University, created the protocols together. The protocols make it possible for data to move between two computers.

Ground Rules Crucial To Bob Kahn’s Early Thinking

Bob Kahn’s protocols, the TCP/IP, contributed majorly to the Internet’s development. But then, what rules were vital to Kahn’s early thinking that gave birth to massive inventions that helped the Internet’s development become a reality? Let’s discuss four of these rules.

Rule #1: Each individual network would have to stand on its own, and no internal changes would be required to connect it to the Internet.

Rule #2: Communications would occur on a best-effort basis. If a packet failed to reach its final destination, it would be retransmitted from the source shortly afterward.

Rule #3: Black boxes, later called gateways and routers, would connect the networks. The gateways would retain no information about the individual packets flowing through them. That kept them simple and avoided complicated adaptation and recovery from diverse failure modes.

Rule #4: There would be no global control at the operations level.

Now, were there issues that needed attention? Yes, several issues crept up and posed a threat to the Internet’s development.

Let’s discuss some of the crucial issues that needed attention.

Issue #1: Algorithms were needed to make sure that lost packets didn’t disable communications permanently and that they could be retransmitted successfully from the source.

Issue #2: Host-to-host “pipelining” had to be provided so that multiple packets could be en route from source to destination at the participating hosts’ discretion, if the intermediate networks permitted it.

Issue #3: Gateway functions were necessary to forward packets the right way. These included interpreting IP headers for routing, handling interfaces, and breaking packets into smaller pieces if necessary.

Issue #4: End-to-end checksums were needed, along with reassembly of packets from fragments and detection of duplicates, if any.

Issue #5: Global addressing was required.

Issue #6: Techniques for host-to-host flow control were needed.

Issue #7: The protocols had to interface with diverse operating systems.

Issue #8: Other concerns included implementation efficiency and internetwork performance. But at the beginning, these were just secondary considerations.
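To illustrate the end-to-end checksum issue from the list above, here is a minimal Python sketch. It assumes the 16-bit ones’ complement sum that the TCP/IP protocols later standardized; the text above does not say which checksum was under consideration at the time, and the function names are hypothetical.

```python
def checksum16(data: bytes) -> int:
    """Compute a 16-bit ones' complement checksum over data."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add each 16-bit word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF

def is_intact(data: bytes, received_checksum: int) -> bool:
    """Receiver-side check: recompute the checksum and compare."""
    return checksum16(data) == received_checksum

payload = b"hello"
sent_checksum = checksum16(payload)

print(is_intact(b"hello", sent_checksum))  # payload arrived unchanged
print(is_intact(b"hellp", sent_checksum))  # one byte was corrupted in transit
```

The sender attaches the checksum to the packet, and the receiving host, not the network in between, decides whether the data arrived intact.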

The Result Of Bob Kahn And Vinton Cerf’s Collaboration


While at BBN (Bolt Beranek and Newman), Kahn started working on operating system principles that are communications-oriented. He even documented most of his early thoughts in a BBN memo, which he titled “Communications Principles for Operating Systems.”

Kahn soon realized that he would need to learn the implementation details of each operating system in order to embed new protocols efficiently.

In 1973, after he had begun his internetting effort, Kahn asked Vinton Cerf, a professor at Stanford University, to lend a helping hand.

Kahn wanted Cerf to join him in working out the protocol’s detailed design, and Cerf wholeheartedly accepted. Cerf was well placed to help and had the knowledge Kahn was seeking. He had already been involved in NCP’s design and development. Additionally, he had firsthand experience of interfacing with existing operating systems.

So, with Cerf’s vast experience from NCP and Kahn’s communications architecture approach, both scientists managed to create something that shaped the Internet. They teamed up to spell out the details of the TCP/IP protocols.

The cooperation between Kahn and Cerf was highly productive. They distributed their first written version at a special meeting of the INWG (International Network Working Group), which had been set up at a conference at Sussex University in 1973.

Vinton Cerf had been invited to chair the group, and he used the opportunity to organize a meeting with INWG’s members, who were heavily represented at the conference.

Bob Kahn and Cerf’s collaboration produced some basic approaches worth considering. Let’s discuss them in detail.

#1: Communication between two processes would logically consist of a long stream of bytes, which they called octets. Each octet is identified by the position it occupies in the stream.

#2: Flow control would be done using sliding windows and acknowledgments (acks). The destination could choose when to acknowledge, and each returned ack would be cumulative for all packets received up to that point.

#3: How the source and destination would agree on the windowing parameters to use was left open.

#4: Though Ethernet was under development at Xerox PARC at the time, the proliferation of LANs wasn’t envisioned, much less that of PCs and workstations. The initial model was national-level networks such as ARPANET, and only a small number of them were expected to exist.

Therefore, a 32-bit IP address was used. The first 8 bits indicated the network, while the remaining 24 bits designated the host on that network.

So, the assumption that 256 networks would be enough for the foreseeable future needed reconsideration once LANs started appearing in the late 1970s.
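The arithmetic behind that 8/24 split is easy to check. The Python sketch below uses the standard `ipaddress` module to turn a dotted address into a 32-bit number and then separates the two fields. The helper name `split_original` and the sample address are hypothetical.

```python
import ipaddress

def split_original(address: str):
    """Split an address into the early 8-bit network and 24-bit host fields."""
    value = int(ipaddress.IPv4Address(address))  # the address as a 32-bit integer
    network = value >> 24        # top 8 bits
    host = value & 0xFFFFFF      # low 24 bits
    return network, host

print(2 ** 8)    # number of distinct 8-bit network values
print(2 ** 24)   # number of distinct 24-bit host values per network
print(split_original("10.1.2.3"))
```

With only 8 bits for the network field, the scheme tops out at 256 networks, which is why it had to be reconsidered once LANs multiplied.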

When you look at Kahn/Cerf’s original paper dedicated to the Internet, you’ll discover that they only described one protocol – the TCP. It was the protocol responsible for providing all the Internet’s transportation and forwarding services.

Kahn wanted the TCP protocol to support a range of transport services. These ran from the virtual circuit model, with completely reliable, sequenced delivery of data, to a datagram service in which the application used the underlying network service directly. With the datagram service, packets could occasionally be lost, reordered, or corrupted.

The initial effort to implement TCP resulted in a version that only permitted virtual circuits. The model was efficient for remote login and file transfer. Still, some of the earliest work on advanced network applications (for example, packet voice in the 1970s) made it clear that TCP shouldn’t correct some packet losses. Rather, the application should deal with them.

So, this led to a reorganization of the original TCP into two protocols: TCP and IP. IP (Internet Protocol) only addressed and forwarded individual packets, while TCP was concerned with service features like flow control and recovery from lost packets.
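The flow control and loss recovery that ended up on the TCP side can be sketched with the sliding window and cumulative acknowledgments described earlier. The Python simulation below is a simplified go-back-N style illustration, not the actual TCP algorithm. The `transfer` function and its `lose_once` parameter are hypothetical, and the network is modeled as simply dropping chosen segments the first time they are sent.

```python
def transfer(segments, window, lose_once):
    """Simulate a sliding-window sender with cumulative acks.

    lose_once: sequence numbers dropped the first time they are sent.
    Returns (delivered_segments, total_transmissions).
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    lost = set(lose_once)
    delivered = []   # receiver side: segments accepted in order
    base = 0         # oldest segment not yet acknowledged
    sent = 0
    while base < len(segments):
        # Sender: transmit every segment in the current window.
        for seq in range(base, min(base + window, len(segments))):
            sent += 1
            if seq in lost:
                lost.discard(seq)  # dropped in transit, this time only
                continue
            # Receiver: accept only the next expected segment.
            if seq == len(delivered):
                delivered.append(segments[seq])
        # Cumulative ack: the receiver reports how much arrived in order,
        # and the sender slides its window forward to that point.
        base = len(delivered)
    return delivered, sent

data, transmissions = transfer(list("abcde"), window=3, lose_once={1})
print(data)           # every segment arrives, in order
print(transmissions)  # more than 5, because of the retransmission
```

A single ack number covers everything received so far, so the sender knows exactly where to resume after a loss. An application that prefers to tolerate losses can skip this machinery and use a plain datagram service instead.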

Some applications didn’t want the TCP’s services, but their needs were considered. An alternative known as UDP (User Datagram Protocol) was included to offer direct access to the basic service of the Internet Protocol.
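To make the idea of “direct access to the basic service” more concrete, here is a minimal sketch using Python’s standard `socket` module. It sends a single UDP datagram over the loopback interface. The function name `udp_send_once`, the loopback address, and the two-second timeout are assumptions chosen for illustration. Note that UDP itself does no retransmission, so on a real network the datagram could simply be lost.

```python
import socket


def udp_send_once(payload: bytes) -> bytes:
    """Send one datagram to a local receiver and return what arrived.

    There is no connection setup, no acknowledgement, and no retry:
    the application talks almost directly to the datagram service.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
        receiver.settimeout(2.0)          # give up instead of waiting forever
        sender.sendto(payload, receiver.getsockname())
        data, _sender_addr = receiver.recvfrom(4096)
        return data


if __name__ == "__main__":
    print(udp_send_once(b"hello"))
```

An application such as packet voice would rather skip a lost datagram than wait for it to be resent, which is why this thinner service was worth offering alongside TCP.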

A major early motivation for both ARPANET and the Internet was resource sharing. For example, users on the packet radio networks were able to gain access to the time-sharing systems attached to ARPANET. Connecting the two was much more economical than duplicating these highly expensive computers.

However, while file transfer and remote login (Telnet) were crucial applications then, electronic mail made a much bigger impact during that era. Email was the more successful innovation, and it shaped how people communicated back then.

Email did not only offer a new model of communication. It changed how people collaborated, first in the development of the Internet itself and later across much of society.

Other applications were proposed during the Internet’s early days. These include the Internet telephony precursor (packet voice communication), diverse models of disk and file sharing, viruses, and early “worm” programs, which displayed the concept of agents.

The Internet’s key concept is that it wasn’t designed for only one application but as a general infrastructure upon which new applications can emerge. The World Wide Web’s emergence is a clear example of why the Internet is called a general infrastructure.

A Handy Tip: The Internet is a general infrastructure, thanks to the general-purpose nature of the TCP and IP’s service.

Proving The Concept Right

At this point, DARPA had acknowledged the TCP/IP’s relevance and wanted them implemented. The agency had to assign three contracts to three notable computer scientists to make this happen.

One of the contracts went to Vinton Cerf (professor at Stanford University), the TCP/IP co-developer. Another contract went to BBN’s Raymond Samuel Tomlinson, a computer programmer, and inventor of the ARPANET system’s first email program. Finally, the third contract went to UCL’s Peter Kirstein, a British computer scientist. The major aim of the contract was the TCP/IP’s implementation.

A Handy Tip: Bob Kahn and Vinton Cerf used the term TCP in their paper, but it contained and described both components (TCP and IP).

Now, let’s discuss how the team performed.

Vinton Cerf headed the Stanford University team, which produced a detailed specification. Within about a year, there were three independent TCP implementations that could interoperate.

This outcome marked the start of a long period of experimentation and development that evolved and matured the Internet’s concepts and technology. Starting with the first three networks (ARPANET, Packet Radio, and Packet Satellite) and their original research communities, the experimental environment has expanded to include essentially every form of network and a very broad-based research and development community.

However, these expansions didn’t provide a quick route to solving the various problems faced. Instead, they created more challenges.

The early TCP implementations were written for TOPS-20 and Tenex, two large time-sharing systems. When desktop computers first appeared, most people thought TCP was too big and complex to run on a personal computer. But David Clark and his research group at MIT showed that a simple and compact implementation of TCP was possible.

David and his team first created an implementation for the Xerox Alto (the early personal workstation developed at Xerox PARC) and then for the IBM PC. The implementation was completely interoperable with other TCP implementations, but it was tailored to the personal computer’s application suite and performance objectives. It also showed that workstations, as well as large time-sharing systems, could be part and parcel of the Internet.

In 1976, Kleinrock published the first book on the ARPANET. In it, he emphasized the complexity of protocols and the pitfalls they often introduce. The book was quite influential in spreading knowledge about packet switching networks to a broader community.

In the 1980s, there was widespread development of PCs, LANs, and workstations. And all these enabled the Internet to flourish. Xerox PARC’s Bob Metcalfe developed the Ethernet technology in 1973, which is now a dominant network technology in PCs, workstations, and the Internet, as a whole.

The shift from having only a handful of networks and time-shared hosts (the initial ARPANET model) to having many networks brought a range of new concepts and changes to the underlying technology.

Firstly, it led to the definition of three network classes (A, B, and C) to accommodate the range of networks. Class A represented large national-scale networks: a small number of networks, each with many hosts.

Class B represented regional scale networks, while Class C represented LANs (Local Area Networks). There were relatively fewer hosts in Class C but a larger number of networks. The increase in the Internet’s scale, coupled with its management issues, caused a major shift. But there was a need to make the network a breeze for people to use.
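The three classes can be sketched in a few lines of Python. The article doesn’t spell out how an address’s class was recognized, so the first-octet ranges and network-bit counts below are taken from the historical classful addressing scheme rather than from this text. The function name `address_class` is just an illustrative choice.

```python
# Sketch of the historical classful scheme (first-octet ranges are an
# addition from the classful addressing rules, not from the article).
NETWORK_BITS = {"A": 8, "B": 16, "C": 24}


def address_class(addr: str) -> str:
    """Return 'A', 'B', or 'C' for a dotted-quad address, else 'other'."""
    first_octet = int(addr.split(".")[0])
    if not 0 <= first_octet <= 255:
        raise ValueError(f"invalid first octet in {addr!r}")
    if first_octet < 128:    # leading bit 0
        return "A"
    if first_octet < 192:    # leading bits 10
        return "B"
    if first_octet < 224:    # leading bits 110
        return "C"
    return "other"           # later used for multicast and reserved ranges


def hosts_per_network(cls: str) -> int:
    """Number of host values available in one network of the given class."""
    return 2 ** (32 - NETWORK_BITS[cls])


if __name__ == "__main__":
    for sample in ("10.1.2.3", "172.16.0.1", "192.168.1.1"):
        cls = address_class(sample)
        print(sample, cls, hosts_per_network(cls))
```

The trade-off is visible in the numbers: a Class A network leaves 24 bits for hosts, while a Class C network leaves only 8, which suits a small LAN.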

To simplify the network, hosts were given unique names, so there wasn’t any need for users to store the numeric addresses in their heads. But frankly, only a handful of hosts were originally available then. Thus, it was feasible and easier to record all the hosts, their addresses, and associated names in a single table. But this wasn’t feasible any longer, following the shift to having a massive number of independently developed and managed networks (For example, LANs).

So, with the simplicity of a single table of hosts gone, a better approach was proposed by Paul Mockapetris, another founding father of the Internet. Mockapetris invented the DNS (Domain Name System) in 1983.

The DNS resolves hierarchical, human-readable host names into IP addresses, making the Internet more accessible for everyday use. An example is www.Americanewsreport.com. You can see how simple and unique the name is. Additionally, it is easy to memorize and remember.

However, as the Internet’s size increased, routers’ capabilities were challenged. Originally, there was a single distributed routing algorithm implemented uniformly by every router on the Internet. But as the number of networks kept exploding, this initial design couldn’t scale as it needed to.

The initial design had to be replaced with something much better and capable of running smoothly in the wake of the internet network’s expansion. Thus, a hierarchical routing model was created to replace the initial routing. An Interior Gateway Protocol (IGP) was also used inside each region the Internet had. In the same vein, an EGP (Exterior Gateway Protocol) was deployed to tie each Internet region together.

This design opened the door to new possibilities. It allowed different regions on the Internet to utilize different IGPs. The idea was to accommodate different requirements for rapid reconfiguration, cost, scale, and even robustness.

Besides the routing algorithm, the size of the addressing tables also stressed the routers’ capacity. New approaches to address aggregation, particularly CIDR (Classless Inter-Domain Routing), were introduced to help control routing table sizes.
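As a rough illustration of what address aggregation means, the sketch below uses Python’s standard `ipaddress` module to collapse four adjacent routes into a single entry. The specific prefixes are made-up sample values, and a real router does far more than this. The point is only that one aggregated entry can stand in for several.

```python
import ipaddress

# Four adjacent /24 networks, as four separate routing table entries.
# These prefixes are sample values chosen for illustration only.
routes = [
    ipaddress.ip_network("10.0.0.0/24"),
    ipaddress.ip_network("10.0.1.0/24"),
    ipaddress.ip_network("10.0.2.0/24"),
    ipaddress.ip_network("10.0.3.0/24"),
]

# collapse_addresses merges adjacent networks into the smallest set of
# prefixes that covers exactly the same addresses.
aggregated = list(ipaddress.collapse_addresses(routes))

if __name__ == "__main__":
    print(len(routes), "entries before aggregation")
    print([str(net) for net in aggregated])  # ['10.0.0.0/22']
```

Because the prefix length is no longer tied to a class, a router can carry one /22 entry instead of four /24 entries, and the table stays smaller.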

The Internet’s evolution exposed multiple challenges. One of them was how to propagate changes to software, especially host software. DARPA helped fix this problem. The agency supported UC Berkeley in investigating modifications to the UNIX operating system, including incorporating the TCP/IP code developed at BBN.

However, UC Berkeley didn’t use the BBN code as it was. The researchers there rewrote it to fit into the UNIX operating system and kernel more efficiently. And as requested by DARPA, UC Berkeley incorporated TCP/IP into the UNIX BSD system, an action that proved vital in dispersing both protocols to the research community.

Many computing system community professionals started using the UNIX BSD for their daily computing research tasks.

Now, when you look back, you’ll discover that incorporating the Internet protocols into a supported operating system (OS) for the research community was a strategy that contributed to the Internet’s widespread adoption.

A critical but interesting challenge came on January 1, 1983: the transition of the ARPANET host protocol from NCP to TCP/IP. It was a “flag-day” style transition, which required all hosts to convert simultaneously. Those that didn’t were left to communicate through rather ad-hoc mechanisms.

But then, this transition didn’t just happen. It was carefully planned and calculated for several years within the community. Thus, when it took place, the transition was quite smooth.

In 1980, three years before the transition, TCP/IP had been adopted as a defense standard. This allowed the defense community to begin sharing in the DARPA Internet technology base, and it eventually led to the partitioning of the community into military and non-military parts.

By 1983, ARPANET’s use had become widespread. A huge number of defense R&D and operational organizations were using it. ARPANET’s transition from NCP to TCP/IP allowed it to be split into a MILNET supporting operational requirements and an ARPANET supporting research needs.

1985 was a good year for the Internet and its founding fathers. They witnessed their effort come to fruition. It is safe to say the Internet was a well-established technology in 1985. It was supporting a wide community of developers and researchers, and people belonging to other communities had started using it for their daily computer communications.

Electronic mail was used broadly across several communities, often with different systems. Interconnection between these mail systems showed the usefulness of broad-based electronic communications between individuals.

Transition To Widespread Infrastructure

The Internet technology, including the various components that made it a reality, went through a series of transitions before becoming what we see today. These transitions shaped the Internet; thus, they are noteworthy. The history of the Internet wouldn’t be complete without them.

Researchers were also pursuing other networks and networking technologies while the experimental validation and widespread use of the Internet were taking place. The usefulness of computer networking, especially the electronic mail that DARPA and Department of Defense contractors demonstrated on the ARPANET, wasn’t lost on other disciplines and communities. So, by the mid-1970s, computer networks had started springing up wherever there was funding.

The US Department of Energy (DoE) founded MFENet for its researchers in Magnetic Fusion Energy. In response, the DoE’s High Energy Physicists built HEPNet.

NASA’s space physicists were not left out. They reportedly developed SPAN. David Farber, Rick Adrion, and Larry Landweber also developed CSNET for the computer science community, both for industrial and academic use. They used grants obtained from the United States National Science Foundation (NSF).

Furthermore, AT&T’s free-wheeling dissemination of the UNIX operating system spawned USENET, which was based on UNIX’s built-in UUCP communication protocols. In 1981, Greydon Freeman and Ira Fuchs joined forces to devise BITNET, a system that linked academic mainframe computers in an “email as card images” pattern.

With the exception of USENET and BITNET, these early networks, including ARPANET, were purpose-built. In other words, they were intended for, and largely restricted to, closed communities of scholars. Thus, there was little pressure for the individual networks to be compatible, and they largely weren’t.

Additionally, the commercial sector pursued alternative technologies, including Xerox’s XNS, DECNet, and SNA from IBM. It was left to Britain’s JANET (1984) and the United States’ NSFNET (1985) programs to declare their intention to serve the whole higher education community, irrespective of discipline.

In fact, United States universities had to meet a condition to obtain NSF financial support for an Internet connection: the connection had to be made available to all qualified users on campus.

In 1985, Dennis Jennings came to NSF to spend a year leading the NSFNET program. The Irish physicist is regarded as one of the Internet’s founders. He made three crucial decisions that shaped the development of NSFNET, the network that eventually transformed into the Internet.

Jennings joined forces with the community to assist NSF in making crucial decisions. One of those decisions was that the TCP/IP protocol would be mandatory for the NSFNET program.

In 1986, Stephen Wolff became the NSFNET program’s new head. Wolff quickly recognized the need for a Wide Area Network (WAN) infrastructure to support the general research and academic community.

Stephen Wolff also saw the need for a strategy to establish that infrastructure on a basis ultimately independent of direct federal funding. Strategies and policies were adopted to achieve that end.

The NSF also chose to support DARPA’s already existing Internet organizational structure, hierarchically organized under the Internet Activities Board (IAB) back then. The public declaration of this decision was the joint authorship of RFC 985 by the IAB’s Internet Engineering and Architecture Task Forces and NSF’s Network Technical Advisory Group.

Policies Made And Implemented By Federal Agencies That Shaped The Internet Of Today

TCP/IP protocol’s selection for the NSFNET program wasn’t the only thing federal agencies implemented. They also made and implemented several other key policies and decisions that shaped today’s Internet. Let’s discuss what those implemented policies were.

#1: Federal agencies shared the costs of common infrastructure, such as the trans-oceanic circuits. They also jointly supported “managed interconnection points” for interagency traffic. The Federal Internet Exchanges (FIX-E and FIX-W) built for this purpose served as models for the Network Access Points and “*IX” facilities that are crucial features of today’s Internet architecture.

#2: To facilitate smooth coordination of this sharing, the Federal Networking Council (FNC) was created. The FNC also joined forces with other international organizations like Europe-based RARE via the Coordinating Committee on International Research Networking (CCIRN). The aim of this collaboration was to coordinate Internet support for the research community around the world.

#3: The cooperation and sharing between agencies on issues relating to the Internet had a very long history. In 1981, there was an unprecedented agreement between Farber, acting for CSNET and the NSF, and DARPA’s Kahn. The agreement permitted CSNET traffic to share ARPANET’s infrastructure on a statistical, no-metered-settlements basis.

#4: Subsequently, in a similar way, the NSF encouraged the NSFNET’s regional (initially academic) networks to seek commercial, non-academic customers and to expand their facilities to serve them. The goal was to take advantage of the resulting economies of scale to reduce subscription costs for everyone.

#5: On the NSFNET’s Backbone, the national-scale segment of the NSFNET, NSF enforced an “Acceptable Use Policy” (AUP). The AUP prohibited Backbone usage for purposes that didn’t support research and education.

The predictable, and intended, outcome of encouraging commercial network traffic at the local and regional levels while denying it access to national-scale transport was to stimulate the emergence and growth of competitive, private, long-haul networks. These included UUNET, PSI, ANS CO+RE, and, later, others.

Starting in 1988, NSF initiated a series of conferences at Harvard’s Kennedy School of Government on the commercialization and privatization of the Internet. The process of privately-financed augmentation for commercial uses was thrashed out there, and on the “com-priv” list on the Internet itself.

#6: Another historical event took place in 1988. A National Research Council committee, headed by Kleinrock and with Clark and Kahn as members, produced an NSF-commissioned report. The report’s title was “Towards a National Research Network.”

The report had a massive influence on then-Senator Al Gore. It is safe to say that the committee’s report ushered in high-speed networks, which laid a solid networking foundation for the future information superhighway.

#7: There was another National Research Council report in 1994. Kleinrock again chaired the committee, and Clark and Kahn were again members. The report’s title was “Realizing The Information Future: The Internet and Beyond.” NSF commissioned the report, the document from which the blueprint for the information superhighway’s evolution emerged. It anticipated crucial issues such as ethics, intellectual property rights, education, pricing, architecture, and regulation for the Internet.

#8: NSF’s privatization policy culminated in April 1995 with the defunding of the NSFNET Backbone. The funds recovered were redistributed to regional networks so they could buy national-scale Internet connectivity from the now diverse private, long-haul networks.

Another thing you need to know is that the Backbone had made the transition from a network built with routers that came out of the research community to one built with commercial equipment.

In its eight-and-a-half-year lifetime, the Backbone was transformed several times. It grew from six nodes with 56 kbps links to 21 nodes with multiple 45 Mbps links. It also saw the Internet grow to over 50,000 networks on all seven continents and in outer space.

Interestingly, the United States of America had approximately 29,000 networks. Now, you can see the weight the NSFNET program’s funding had. The program received approximately $200 million from 1986 to 1995.

Such was the weight of the program’s funding, and the quality of the protocols themselves, that by 1990, when ARPANET was finally decommissioned, TCP/IP had supplanted or marginalized most other wide-area computer network protocols across the globe, and IP was on its way to becoming the bearer service of the Global Information Infrastructure.

What Role Did Documentation Play?

The Internet grew rapidly because of the free and open access researchers had to basic documents, especially the protocols’ specifications.

ARPANET and the Internet started in university research communities, which encouraged the academic tradition of openly publishing ideas and results. The traditional academic publication cycle, though, was too formal and slow for the dynamic exchange of ideas considered crucial to creating networks.

So, Stephen D. Crocker, back then at UCLA, took a critical step in 1969. He established the RFC (Request for Comments) series of notes. The memos were intended to offer an informal and quick way to share information amongst network researchers.

In the beginning, the RFCs were printed on paper and shared through snail mail. But things changed when the File Transfer Protocol (FTP) became available for use. RFCs were then prepared as online files, and researchers could access them via FTP. Accessing the RFCs via the World Wide Web at various websites across the globe is now a breeze. SRI, in its role as the Network Information Center, maintained the RFCs’ online directories.

Jon Postel served as the RFC editor and also managed the centralized administration of required protocol number assignments. He played both roles until his demise on October 16, 1998.

The RFCs’ effect was the creation of a positive feedback loop, where proposals and ideas would be made available in one RFC, thereby triggering another RFC that had additional ideas, and so on. A specification document was prepared once some consensus, or at least a consistent set of ideas, had come together. The different research teams then used that specification as the basis for their implementations.

The RFCs have changed over time. They now pay more attention to protocol standards (the “official” specifications). But informational RFCs that give background information on protocols and engineering issues, or describe alternative approaches, still exist. The RFCs are now viewed as the “documents of record” in the Internet standards and engineering community.

So, the RFCs have open access. They are free for anyone who has a connection to the Internet. This open access promotes the Internet’s growth. How? It allows the actual specifications to be used, for example, in college classes and by entrepreneurs working to develop new systems.

Email has been a crucial factor in every aspect of the Internet. That is evident in the development of protocol specifications, technical standards, and Internet engineering. The earliest RFCs often presented a set of ideas developed by researchers at one location to the rest of the community.

But the emergence of email changed everything. It changed the authorship pattern. RFCs started being presented by joint authors who shared a view, regardless of their locations.

Specialized email mailing lists have been in use for a long time. They were useful in the development of protocol specifications and continue to be a vital tool.

In the IETF (Internet Engineering Task Force), there are over 75 working groups, each working in a separate area of Internet engineering. Each group has a mailing list to discuss draft documents under development. When a group reaches consensus on a draft document, it may be distributed as an RFC.

The Internet’s rapid expansion is fueled by its capacity to promote information sharing. But we shouldn’t forget that the network’s first role in information sharing was sharing information about its own design and operation through the RFC documents. And this method for evolving new capabilities in the network remains crucial to the Internet’s future evolution.

The Creation Of A Wider Community

The Internet is as much a collection of communities as it is a collection of technologies. Its success is rooted in its ability to satisfy basic community needs and to use the community effectively to push its infrastructure forward.

This community spirit started a long time ago, in the days of the ARPANET. ARPANET researchers worked as one community to achieve the initial demonstrations of packet switching technology described earlier.

In the same vein, Packet Radio, Packet Satellite, and other DARPA computer science research programs were multi-contractor collaborative activities. These activities relied heavily on whatever mechanisms were available to coordinate their efforts, beginning with electronic mail and later adding file sharing, remote access, and eventually World Wide Web capabilities.

Each of these programs formed a working group, beginning with the ARPANET Network Working Group. And because of ARPANET’s unique role as an infrastructure that supported diverse research programs, the Network Working Group evolved into the Internet Working Group as the Internet started evolving.

In the 1970s, Vinton Cerf recognized the Internet’s growth, accompanied by growth in the research community’s size and a need for coordination mechanisms. Back then, he was DARPA’s Internet Program’s manager. The need for coordination prompted Cerf to form several coordination bodies.

The bodies included the ICB (International Cooperation Board), chaired by UCL’s Peter Kirstein, whose primary aim was coordinating activities with some cooperating European countries on Packet Satellite research. There was also an Internet Research Group, an inclusive group that provided an environment for general information exchange.

The ICCB (Internet Configuration Control Board), chaired by Clark, was another. What was the ICCB’s role? Its primary duty was assisting Vinton Cerf in managing the ever-increasing Internet activity.

Barry Leiner took over as head of DARPA’s Internet research program in 1983. When he assumed office, he and Clark quickly acknowledged that the Internet community’s steady growth showed the need to restructure the coordination mechanisms.

The ICCB was disbanded, and a structure of Task Forces was put in its place. Each Task Force focused on a different aspect of the technology, such as end-to-end protocols, routers, etc. The IAB (Internet Activities Board) was then formed from the Task Forces’ chairs.

Coincidentally, the Task Forces’ chairs were the same people as the old ICCB members, and Dave Clark remained the chair. After some changes in the IAB’s membership, Phill Gross became chair of a revitalized Internet Engineering Task Force (IETF), at that time just one of the IAB Task Forces.

As we saw, by 1985 the practical/engineering side of the Internet was witnessing more growth. The growth positively impacted the IETF, as attendance at its meetings skyrocketed. This pushed Phill Gross to form a substructure within the IETF in the form of working groups.

A major expansion in the community complemented this growth. DARPA was no longer the only player funding the Internet. NSFNET and several United States and international government-funded activities joined in. Additionally, the commercial sector’s interest also started growing.

In 1985, Leiner and Kahn left DARPA, a move that reduced Internet activity at the agency. As a result, the IAB was left without its primary sponsor and increasingly assumed the leadership mantle.

The growth continued and created more substructure within both the IETF and the IAB. The IETF even had to combine its WGs (Working Groups) into areas, each with its own Area Directors.

They also created the IESG (Internet Engineering Steering Group) from the Area Directors. Furthermore, the IAB recognized the IETF’s increasing relevance and restructured the standards process to recognize the IESG as the primary review body for standards.

The IAB also restructured so that the other Task Forces besides the IETF were combined into the IRTF (Internet Research Task Force), with American computer scientist Jon Postel as chair. The older task forces were renamed research groups.

The commercial sector witnessed encouraging growth, though at a price. The growth caused increased concern about the standards process itself. You’ll discover that the Internet grew massively from the early 1980s to this day. It grew beyond its basic research roots to include increased commercial activity and a broad user community.

However, calls to make the process open and fair intensified. This, together with the obvious need for community support for the Internet, finally brought about the Internet Society’s creation in 1991. It took place under the auspices of Kahn’s Corporation for National Research Initiatives (CNRI) and the leadership of Cerf, who was then with CNRI.

Another re-organization happened in 1992. The Internet Activities Board was re-organized and renamed the Internet Architecture Board, operating under the Internet Society’s umbrella. A more “peer” relationship was defined between the new IAB and the IESG, with the IETF and IESG taking bigger responsibility for standards approval.

Ultimately, a mutually supportive and cooperative relationship formed between the Internet Society, IETF, and IAB, with the Internet Society taking on as a goal the provision of service and other measures that would facilitate the IETF’s work.

Furthermore, the recent developments, including the World Wide Web’s widespread deployment, gave rise to a new community. The majority of those working on the World Wide Web didn’t view themselves as primarily network developers and researchers.

A new coordination organization was therefore formed: the World Wide Web Consortium (W3C). The W3C was initially led from MIT’s Laboratory for Computer Science by the WWW’s developer, Tim Berners-Lee, together with Albert Vezza.

The W3C took on the responsibility of evolving the various standards and protocols associated with the web. Thus, through over two decades of Internet activity, there has been a steady evolution of organizational structures designed to support and facilitate the ever-growing community working jointly on Internet issues.

How The Internet Technology Was Commercialized

The Internet’s commercialization entailed not only the development of competitive, private network services but also the development of commercial products implementing Internet technology.

When you look closely at the Internet’s history in the 1980s, you’ll see how several vendors had the TCP/IP protocol incorporated into their products. What was the reason for this action? It was simple. Buyers for the networking approach were available.

Unfortunately, things didn’t turn out as envisaged. The vendors lacked real information about how the technology was supposed to work and how customers planned to use this networking approach.

Many vendors viewed TCP/IP as a nuisance add-on that had to be glued onto their proprietary networking solutions, such as DECNET, SNA, NetBios, and Netware. The DoD had already made TCP/IP use compulsory in many of its purchases. However, it gave the vendors little help in building useful TCP/IP products.

In 1985, Dan Lynch and the IAB made giant steps to help the situation after realizing the lack of information and training vendors were experiencing. Now, what step did they take? They had to organize a workshop for vendors on TCP/IP.

Lynch and the IAB made it a three-day workshop and urged all vendors to attend. They shared information on how TCP/IP operates during the training, including its drawbacks. The main speakers for the workshop were from DARPA and included people who either had a hand in its development or used the TCP/IP protocol for their day-to-day work.

Interestingly, approximately 250 vendor personnel turned up to listen to 50 inventors and experimenters. And both sides were impressed. The vendors were surprised to find how open the inventors were about how things worked, and about what still didn’t work.

On the other hand, the inventors were happy to hear about problems they hadn’t considered, which the vendors had discovered in the field. This started a two-way discussion that lasted for over a decade.

After two years of tutorials, conferences, workshops, and design meetings, a special event was organized. It invited the vendors whose products ran TCP/IP well enough to come together in one room for three days to show how well their products worked together and ran over the Internet.

That was the first Interop trade show, held in September 1988. 50 companies made the cut, and approximately 5,000 engineers from potential customer organizations came to see if things did work as claimed.

Interestingly, it did work. Why? The credit goes to the vendors. They worked harder to make sure the products of everyone interoperated with the other products, including their competitors’.

The Interop trade show is still being organized and it has grown immensely. Now, it’s being organized in seven locations globally every year, and its audience has reached over 250,000 individuals. People come to the show to learn which products work together and discuss new products and technologies.

In parallel with the commercialization effort that the Interop activities highlighted, the vendors became more interested in the TCP/IP protocol suite. They started attending the IETF’s meetings, which are held 3 or 4 times a year.

New ideas for extending the TCP/IP protocol suite are discussed at the IETF’s meetings. At first, a few hundred people attended, mostly from academia and funded by the government. But things changed drastically. The meetings began to draw more than a thousand attendees, mostly from the vendor community, and they paid their own way.

Interestingly, this self-selected group slowly evolved the TCP/IP protocol suite in a mutually cooperative manner. Such meetings are useful because all the stakeholders are present: researchers, vendors, and end users.

Network management offers a good example of the interplay between commercial and research communities. In the Internet’s early days, the major emphasis was defining and implementing protocols that achieved interoperation.

As the network grew bigger, it became obvious that ad hoc procedures for network management would not scale. Manual configuration of tables was replaced with distributed automated algorithms, and better tools were devised to help isolate faults.

Another historical event happened in 1987. It became obvious that a protocol was needed that would let network elements, such as routers, be managed remotely in a uniform way.

Thus, several protocols that could fulfill this need were proposed. They included SNMP (Simple Network Management Protocol), which was designed for simplicity and derived from an earlier proposal known as SGMP; HEMS, a more complicated design from the research community; and CMIP, which came from the OSI community.

However, HEMS wasn’t a success, even though it was considered. A series of meetings led to the decision to withdraw it as a standardization candidate.

Work on the other two proposals, SNMP and CMIP, went ahead. SNMP was seen as the near-term solution and CMIP as the longer-term approach, and the market was left free to pick the more suitable one. SNMP has since become a near-universal solution. It is now used almost everywhere around the globe for network-based management.

Now, if you have been paying close attention to the Internet’s commercialization in the last few years, you’ll understand that a new phase has emerged. Originally, commercial efforts came mainly from vendors offering basic networking products and from service providers offering connectivity and basic Internet services.

Today, the Internet has turned into almost a “commodity” service. Most of the latest attention has been on using this global information infrastructure to support other commercial services. The rapid and widespread adoption of browsers and World Wide Web technology has accelerated this shift by giving users easy access to information linked throughout the globe.

Products are available to make providing that information easier, and many of the latest technological developments have focused on delivering increasingly sophisticated information services.

The History Of The Future

The FNC (Federal Networking Council) unanimously passed a resolution on October 24, 1995, defining the term “Internet.” The definition was developed in consultation with members of the Internet and intellectual property rights communities.

According to the resolution, the term “Internet” refers to the global information system that:

1. Is linked together logically by a unique global address space based on the IP (Internet Protocol) or its subsequent extensions/follow-ons.

2. Can support communications using the TCP/IP suite or its subsequent extensions/follow-ons and other protocols that are IP compatible.

3. Offers, uses or makes accessible, whether privately or publicly, high-level services that are layered on the communications and related infrastructure described herein.

The bottom line here is that the Internet has undergone massive changes in the decades since it came into existence. It was born in the time-sharing era, yet it has survived into the era of personal computers, client-server and peer-to-peer computing, and the network computer. It was developed before LANs existed but has accommodated that network technology, as well as the more recent ATM and frame-switched services.

It was meant to support a range of functions, from file sharing and remote login to resource sharing and collaboration. It has also spawned electronic mail and, more recently, the World Wide Web (WWW).

But what’s most important is that it began as the creation of a small band of dedicated researchers and grew to become a commercial success that attracts billions of dollars of investment annually.

Now, has the Internet stopped evolving? No, it hasn’t. The Internet might be a network in geography and name, but it’s a creature of the computer, not the traditional network of the television and telephone industry. So, it must continue evolving at the speed of the computer industry to remain relevant.

The Internet is evolving in several ways. One is the new services it is starting to offer users. An example is real-time transport to support video and audio streams.

The Internet’s availability, coupled with powerful, affordable computing and communications in portable form (laptops, mobile devices, PDAs, and two-way pagers), makes computing and communications on the move a possibility.

There are positives in this evolution, as it could usher in Internet telephone and even Internet television. The Internet’s evolution will also permit more sophisticated forms of pricing and cost recovery, which may be a painful requirement in this commercial world.

The Internet’s evolution will accommodate a newer generation of network technologies with varied requirements and characteristics, e.g., satellites and broadband residential access. Newer forms of service and modes of access will also spawn new applications, a move that will further drive the net’s evolution massively.

There are also pressing questions for the Internet’s future. The most pressing one is not how the technology will change.

It is how the process of evolution and change itself will be managed.

As you read through this article, you’ll discover that a core group of designers drove the Internet’s architecture for a long time. But the number of interested parties has skyrocketed, and that has changed the form of the group driving the Internet’s architecture.

The Internet’s success has increased the number of stakeholders. And these stakeholders may either have an intellectual or economic interest in the network.

In the debates over the domain name space and the form of the next generation of IP addresses, we now see a struggle to find the next social structure capable of guiding the Internet in the future. And as things stand, it will be difficult to find the form of that structure. Why? The reason is the huge number of stakeholders.

The industry is also facing a challenge to find the economic rationale that would match the huge investment required for future growth. An example is upgrading residential access to a technology considered more suitable.

But the truth is, if the Internet eventually stumbles, it won’t be because the technology wasn’t available. Instead, it would be because we lacked the motivation and vision to make it happen, and because we were unable to set a direction and collectively follow it through into the future.

How Important Is The Internet Today?

No one can argue that the Internet is one of the biggest and most significant inventions on the planet. It opened the door for growth and development in different sectors and aspects of our lives.

Now, let’s discuss some of the benefits of the Internet.

#1: Sharing, communication, and connectivity:

Communication between academics and researchers was once challenging. Researchers had to visit places with computers to access data. This limited cooperation and made collaboration between researchers tougher than it should have been.

But thanks to the Internet, researchers can interact and share data with their colleagues around the world.

Furthermore, the Internet has made communication between people a breeze. It also made communication via diverse means possible.

You can chat with your contacts or video-call them wherever they are on the planet. All you both require is internet connectivity.

#2: Information, learning, and knowledge:

The Internet has made it possible for people to share what they know and learn about various subjects with ease. You can even ask questions and have an expert from around the world respond to them.

The Internet has also made life a breeze for students. There is information on almost every topic on the Internet. You can Google your question or topic of interest and see for yourself.

#3: Boost e-commerce:

The Internet opened doors to endless possibilities and opportunities for businesses. As long as you can get your business online and deploy effective marketing strategies, you can create brand awareness, increase your customer base and possibly hit your sales target.

The Internet has also made it possible for people to sell almost anything they see fit. You don’t even need to have a physical store or company to sell online and make money doing so.

#4: Banking, shopping, and bills:

The Internet has made banking a breeze for customers and financial institutions. People can check their bank balances, transfer money, pay bills, and even chat with customer support without leaving their homes.

The Internet has also made shopping a breeze. You can be in the United States of America and purchase goods from China and other countries thousands of miles away.

#5: Entertainment:

The Internet has had, and will continue to have, a massive impact on the entertainment industry. You can play video games online, listen to music, watch short movies, create videos, and download content from the Internet.

The Internet gives movie producers, singers, comedians, dancers, and professionals in the entertainment industry the platform to announce their projects to a wider audience.

#6: Access global workforce and work from home:

Today, millions of people work from home, thanks to the Internet. It might not have been possible otherwise.

Furthermore, the Internet gives companies the opportunity to access a global workforce and hire the right people for different roles.

Conclusion

The Internet has a rich and interesting history. However, no single individual can claim they invented the Internet. A myriad of scientists and researchers made it happen.

The dedication of the Internet’s founders is something worth discussing. They worked tirelessly to ensure the Internet was a success.

Several agencies, groups, and research communities were created to oversee the development of the various components that led to the establishment of the Internet.